Measuring feature adoption and usage: Metrics, funnels, and examples

Introduction

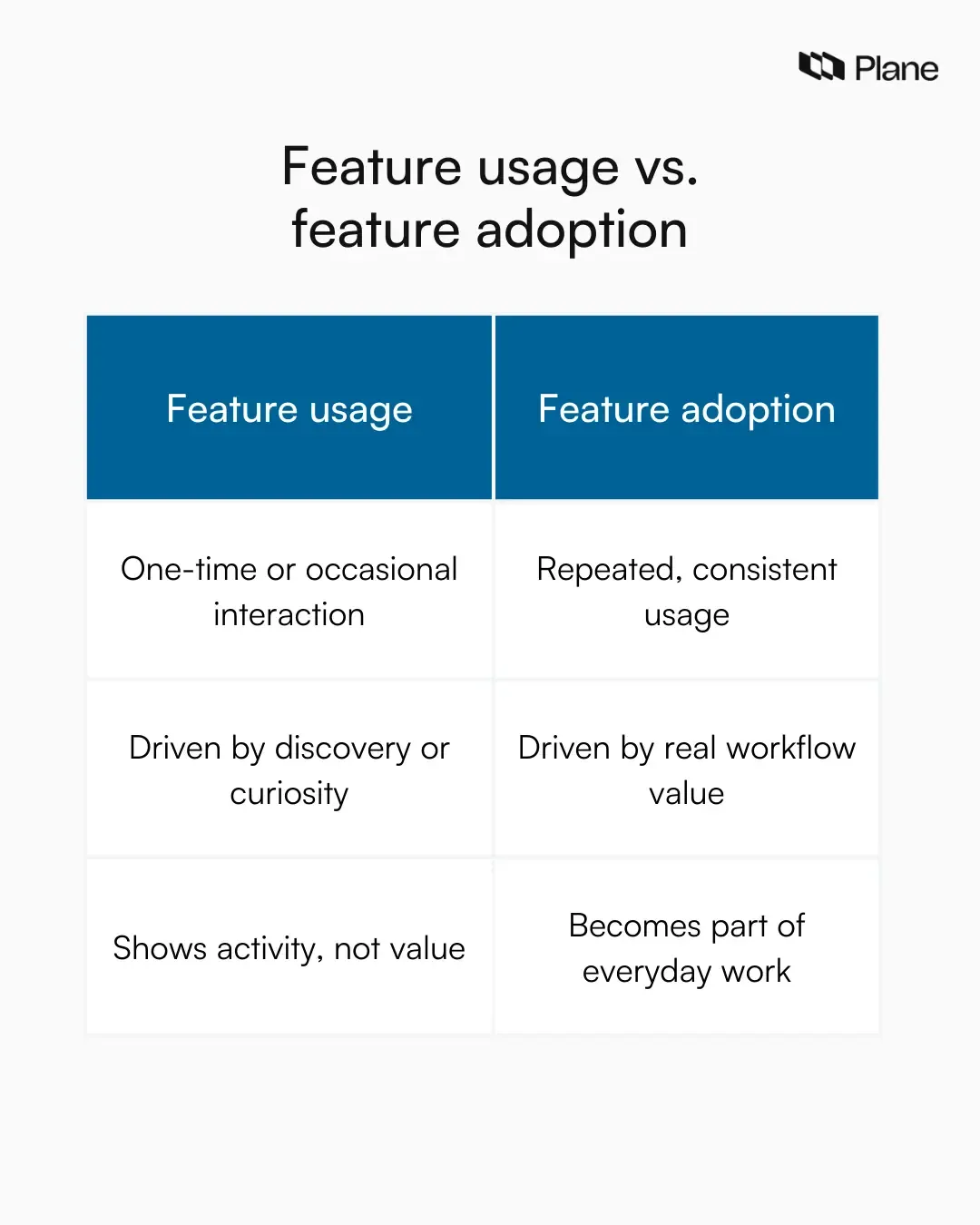

A team ships a long-awaited feature, celebrates the release, and moves on. Weeks later, usage looks flat, and no one knows why. Measuring feature adoption and usage helps close the gap between shipping work and creating value. Feature usage metrics show activity, but feature adoption metrics reveal whether a feature fits real workflows and earns repeat use. This guide explains how to measure feature adoption and usage using practical metrics and adoption funnels that reflect how products succeed in real environments.

What is feature adoption?

Feature adoption describes whether a feature becomes part of how users actually work. It goes beyond initial interaction and focuses on repeated, value-driven behavior over time.

This distinction matters because many teams rely solely on feature usage metrics and assume that activity equals success. In practice, activity and adoption describe very different outcomes.

Feature usage means activity

Feature usage refers to any recorded interaction with a feature. A user clicks a button, opens a view, or triggers an action once or twice. Feature usage metrics capture this activity and help teams understand exposure and engagement at a surface level. Usage answers a narrow question: Did someone interact with the feature during a specific period?

Usage alone does not explain whether the feature solved a real problem or supported an ongoing workflow. A feature can show healthy usage numbers even when users abandon it after the first attempt.

Feature adoption means repeated, value-driven behavior

Feature adoption measures whether users return to a feature because it helps them complete meaningful work. Adoption happens when a feature supports a clear job and fits naturally into existing workflows. Feature adoption metrics focus on repetition, consistency, and sustained value rather than one-time interaction.

When teams measure feature adoption, they look for signals such as consistent usage patterns, faster task completion, and reduced reliance on alternative tools. Adoption reflects trust and usefulness, not curiosity.

Why a feature can be used but not adopted

A feature often gets used without ever being adopted. This usually happens when discovery is easy but long-term value is unclear. Users may try a feature because it is visible or new, then stop using it once friction appears or expectations remain unmet.

Measuring feature adoption and usage together helps teams spot this gap. Feature usage metrics highlight initial interest, while feature adoption metrics reveal whether the feature earns a lasting place in everyday work. Understanding this difference helps product teams evaluate success accurately and avoid false confidence based on activity alone.

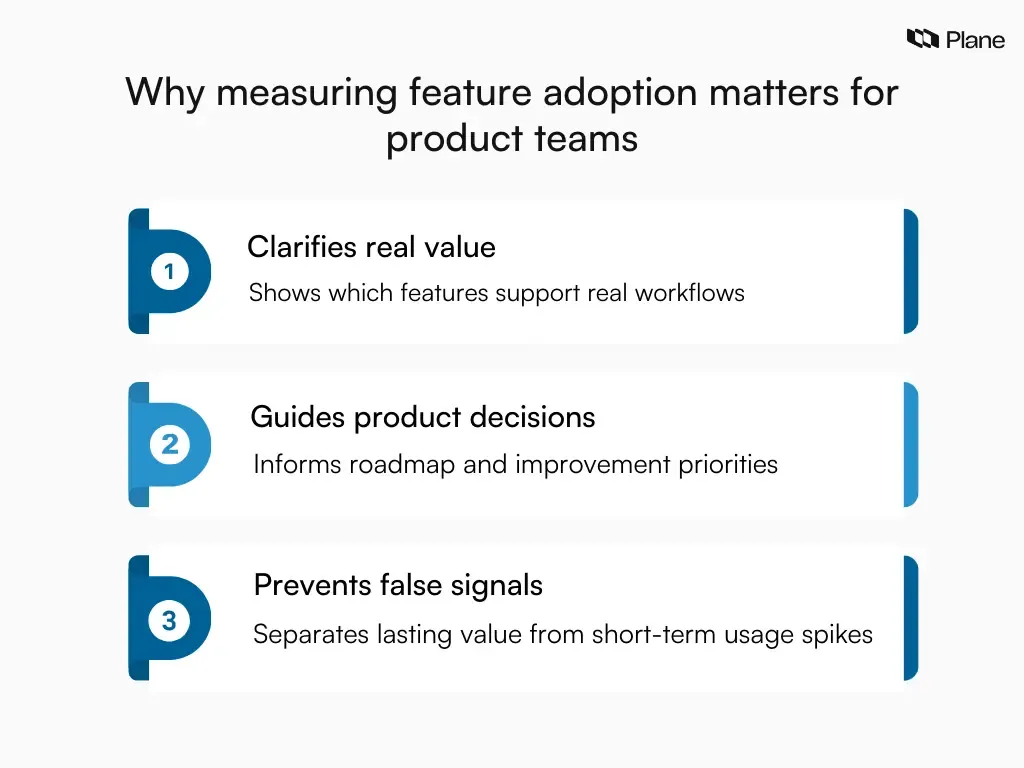

Why measuring feature adoption matters for product teams

Every product team ships features with an expectation: users will find value and keep coming back. Measuring feature adoption shows whether that expectation holds up in real-world use. It gives teams a clear view of what actually works after launch, not just what looks active on the surface.

1. Identifying features that actually deliver value

Feature adoption metrics reveal which features are used repeatedly because they help users complete real work. When users return to a feature without prompts, it signals trust and usefulness. Measuring feature adoption and usage together helps teams identify features that create lasting value rather than short-term interactions. This clarity allows teams to focus their effort on features that strengthen the product experience.

2. Prioritizing improvements and roadmap decisions

Strong adoption data brings confidence to roadmap planning. Feature adoption measurement highlights where users struggle, where workflows break, and where small changes unlock meaningful gains. Teams can prioritize improvements that increase adoption rather than adding new features that repeat existing problems. This keeps roadmaps grounded in real behavior and user needs.

3. Avoiding false positives from surface-level usage data

Feature usage metrics often show activity that fades quickly. A feature may get explored, tested, and abandoned within days. Measuring feature adoption helps teams recognize this early. Adoption metrics focus on sustained usage and real impact, ensuring product success reflects long-term value rather than temporary spikes in activity.

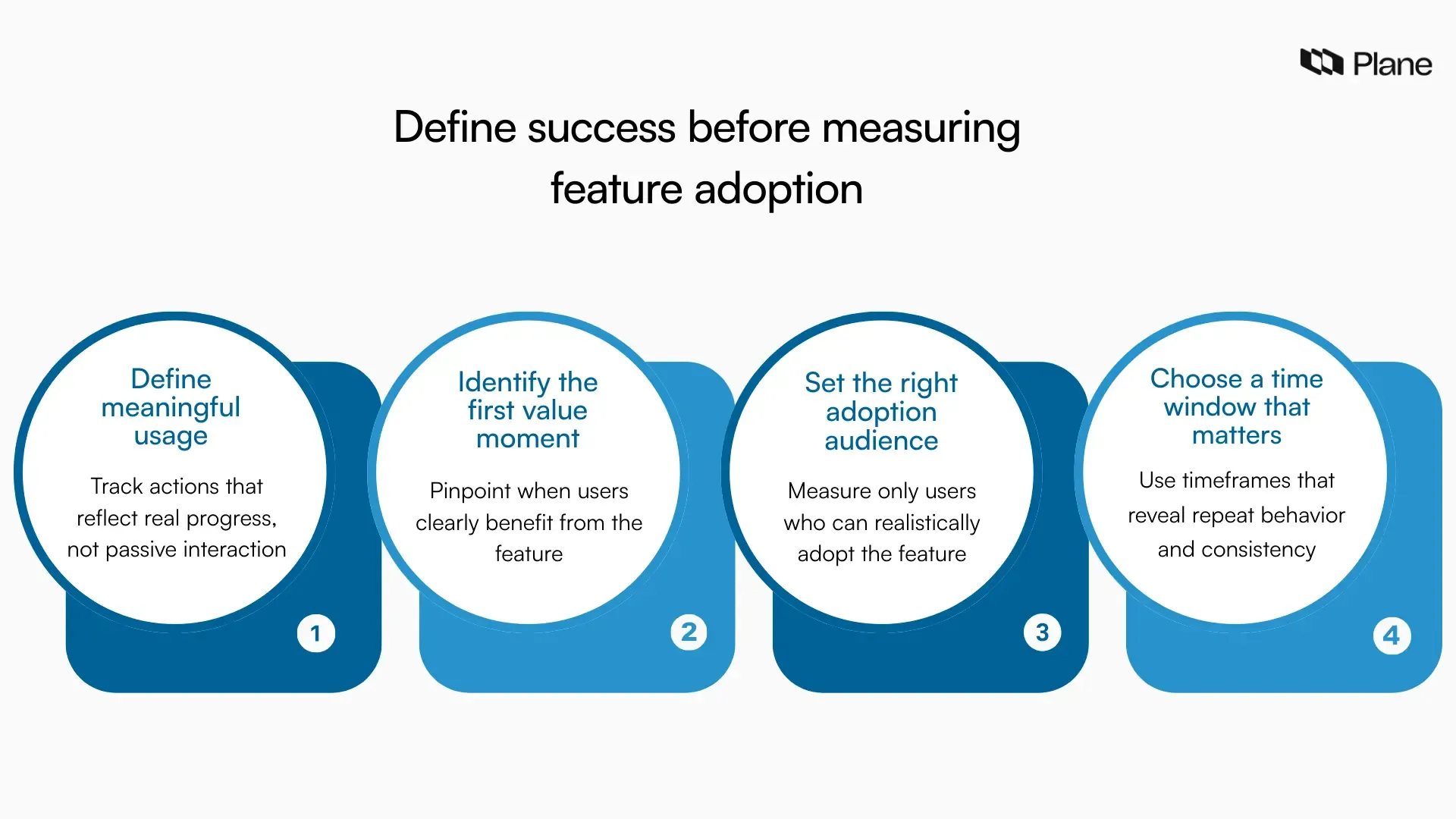

Define what success looks like before you measure anything

Most feature adoption metrics fail long before dashboards come into play. They fail at definition. Teams start tracking before they agree on what success looks like, which turns measuring feature adoption and usage into a debate over numbers rather than a source of insight. Four decisions shape meaningful measurement.

- Start with usage: Opening a feature does not mean it is being used. Real usage shows up when a user completes an action that advances their work. If usage does not represent progress inside a workflow, feature usage metrics inflate activity without explaining value.

- Adoption begins even later: It starts when users return to a feature because it helped them once and trust it to help again. This first value moment marks the shift from exploration to reliance. Measuring feature adoption means tracking whether users reach that moment repeatedly and integrate the feature into how they work.

- Context matters just as much: A user cannot adopt what they cannot access or do not need. Roles, permissions, and responsibilities shape who is even capable of adopting a feature. Feature adoption measurement becomes meaningful only when metrics reflect this reality.

- Time is the final lens: Early behavior shows curiosity. Sustained behavior shows commitment. Short windows reveal first impressions, while longer windows expose whether adoption holds in real workflows. Choosing the right time window enables feature adoption and usage metrics to tell a clear story rather than produce scattered signals.
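The four decisions above can be written down as an explicit definition before any dashboard exists. The sketch below is one hedged way to do that in Python. The class name `AdoptionDefinition`, the event name `plan_published`, the role `project_manager`, and the specific thresholds are all hypothetical placeholders, not values from any particular analytics tool.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class AdoptionDefinition:
    """Hypothetical container for the four decisions: usage, adoption, context, time."""
    value_event: str           # the action that counts as real usage
    min_repeats: int           # how many value actions count as adoption
    window_days: int           # the time window being evaluated
    eligible_roles: frozenset  # who can realistically adopt the feature

    def is_adopted(self, role, events, window_start):
        """events: iterable of (event_name, timestamp) pairs for one user."""
        if role not in self.eligible_roles:
            return False  # ineligible users are never counted as adopters
        window_end = window_start + timedelta(days=self.window_days)
        hits = sum(
            1
            for name, ts in events
            if name == self.value_event and window_start <= ts < window_end
        )
        return hits >= self.min_repeats


# Example values are assumptions chosen for illustration only.
definition = AdoptionDefinition(
    value_event="plan_published",
    min_repeats=2,
    window_days=28,
    eligible_roles=frozenset({"project_manager"}),
)
sample_events = [
    ("plan_published", datetime(2024, 1, 3)),
    ("feature_opened", datetime(2024, 1, 4)),   # opening alone is not usage
    ("plan_published", datetime(2024, 1, 20)),
    ("plan_published", datetime(2024, 3, 1)),   # outside the 28-day window
]
pm_adopted = definition.is_adopted("project_manager", sample_events, datetime(2024, 1, 1))
engineer_adopted = definition.is_adopted("engineer", sample_events, datetime(2024, 1, 1))
```

Keeping the definition in one place makes it easier for a team to argue about the definition itself rather than about the numbers it produces.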

The feature adoption funnel: Turning metrics into a story

Measuring feature adoption and usage works best when metrics follow how users actually move through a product. The feature adoption funnel helps teams connect individual feature usage metrics into a sequence that explains user behavior from first exposure to regular use.

1. Awareness: Did users see or discover the feature?

Awareness answers a simple but often overlooked question. Did users even notice the feature?

At this stage, teams look at signals such as:

- How many eligible users encountered the feature in their workspace

- Whether the feature appeared naturally within existing workflows

- Whether discovery depended on announcements or required extra effort

For example, a reporting feature might exist in the product, but if it sits behind multiple clicks or unfamiliar labels, awareness stays low. Weak awareness usually points to discoverability issues rather than feature quality.

2. Activation: Did users reach the first value?

Activation shows whether curiosity turned into meaningful action. It captures the moment users try the feature and experience a clear benefit.

Product teams typically measure:

- Whether users completed the key action tied to the first value

- How many users dropped off before reaching that moment

- How long it took users to reach the first value after discovery

For instance, if a new workflow automation requires several setup steps, users may start but never finish. Low activation signals friction, unclear value, or setup complexity rather than a lack of interest.

3. Usage: Are users applying it in real workflows?

Usage reveals whether the feature fits into how users actually work. At this stage, feature usage metrics focus on consistency and context, not just frequency.

Teams often look for:

- Repeated use within relevant workflows

- Usage spread across real tasks rather than isolated actions

- Patterns that align with daily or weekly work cycles

A feature that sees occasional use during exploration but rarely appears in active projects often struggles to find a natural place in workflows.

4. Repeated usage: Is it becoming part of regular work?

Repeated usage reflects true feature adoption. Users return because the feature saves time, improves clarity, or reduces effort.

Strong adoption shows up through:

- Consistent usage over longer time windows

- Reduced reliance on alternative tools or manual work

- Stable usage patterns across teams or accounts

For example, when teams repeatedly rely on the same feature to plan work or track progress, adoption has moved beyond experimentation. Measuring feature adoption at this stage helps teams understand long-term value and product impact.

Together, these stages turn feature adoption metrics into a narrative. Instead of asking whether a feature is successful, the funnel helps teams understand where momentum builds, where it slows, and where focused improvements can drive real adoption.
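The funnel stages can be counted from raw events in a few lines. The following is a minimal sketch, assuming three hypothetical event names (`feature_exposed`, `first_value_reached`, `workflow_completed`) and an assumed threshold of three completed workflows for "repeated usage". Real instrumentation and thresholds will differ.

```python
from collections import Counter


def adoption_funnel(events, eligible_users, repeat_threshold=3):
    """Count eligible users at each funnel stage.

    events: iterable of (user_id, event_name) pairs.
    Event names and repeat_threshold are illustrative assumptions.
    """
    eligible = set(eligible_users)
    exposed, activated = set(), set()
    use_counts = Counter()
    for user_id, name in events:
        if user_id not in eligible:
            continue  # keep ineligible users out of every stage
        if name == "feature_exposed":
            exposed.add(user_id)
        elif name == "first_value_reached":
            activated.add(user_id)
        elif name == "workflow_completed":
            use_counts[user_id] += 1

    # Each stage only counts users who also passed the previous stage.
    activated &= exposed
    used = {u for u in activated if use_counts[u] >= 1}
    repeated = {u for u in used if use_counts[u] >= repeat_threshold}
    return {
        "awareness": len(exposed),
        "activation": len(activated),
        "usage": len(used),
        "repeated_usage": len(repeated),
    }


sample = [
    ("a", "feature_exposed"), ("a", "first_value_reached"),
    ("a", "workflow_completed"), ("a", "workflow_completed"), ("a", "workflow_completed"),
    ("b", "feature_exposed"), ("b", "first_value_reached"), ("b", "workflow_completed"),
    ("c", "feature_exposed"),
    ("e", "feature_exposed"),  # not in the eligible set, so ignored
]
funnel = adoption_funnel(sample, eligible_users={"a", "b", "c", "d"})
```

Reading the counts side by side shows where momentum slows: a large gap between awareness and activation points to a different problem than a gap between usage and repeated usage.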

Core metrics to measure feature adoption and usage

Each feature adoption metric answers a different question about user behavior. Looking at them together helps product teams understand reach, depth, and long-term value, rather than relying on a single number.

1. Feature adoption rate

The feature adoption rate shows the percentage of eligible users who adopted a feature within a given time period. It provides a high-level view of uptake after release.

Teams usually look at:

- How many users reached the adoption action that signals value

- How many users were eligible to adopt the feature

- How adoption changes over time after launch

For example, if a new planning feature is available only to project managers, the adoption rate should be calculated using that group alone. This metric works best for tracking progress, comparing features, and spotting early adoption trends.
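As a rough sketch, the calculation is adopters divided by eligible users, with ineligible users excluded from both sides. The function name and the user IDs below are hypothetical.

```python
def feature_adoption_rate(adopters, eligible_users):
    """Percentage of eligible users who reached the adoption action.

    Adopters outside the eligible group are ignored so the numerator
    and denominator describe the same population.
    """
    eligible = set(eligible_users)
    if not eligible:
        return 0.0
    eligible_adopters = set(adopters) & eligible
    return 100 * len(eligible_adopters) / len(eligible)


# Illustrative data: 100 eligible project managers, 40 of whom adopted,
# plus one adopter who is not in the eligible group.
project_managers = {f"pm{i}" for i in range(100)}
adopters = {f"pm{i}" for i in range(40)} | {"engineer1"}
rate = feature_adoption_rate(adopters, project_managers)
```

The intersection step is the important design choice here: it prevents an adopter from outside the eligible group from inflating the rate.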

2. Breadth of adoption

Breadth of adoption explains how widely a feature is used across users or teams. It focuses on reach rather than intensity.

Teams often examine:

- How many unique users or teams used the feature

- Whether usage spreads across different roles or accounts

- Whether adoption remains limited to a small group

A feature used by many teams often delivers broader value than a feature used heavily by a single team. Breadth helps teams understand whether a feature supports common workflows or serves niche needs.

3. Depth of adoption

Depth of adoption reflects how deeply users rely on a feature over time. It highlights intensity and consistency rather than reach.

Product teams typically track:

- How often users return to the feature

- How many actions they complete within it

- Whether usage grows more advanced over time

For example, a reporting feature used occasionally for quick checks shows shallow depth, while the same feature used regularly for planning and reviews shows strong adoption. Depth helps identify features that become essential to daily work.
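Breadth and depth can be read from the same event stream. The sketch below assumes each completed value action is logged as a hypothetical `(user_id, team_id)` pair within the chosen time window, and uses average actions per active user as one simple stand-in for depth.

```python
from collections import Counter


def breadth_and_depth(usage_events):
    """usage_events: iterable of (user_id, team_id) pairs, one per value action."""
    events = list(usage_events)
    per_user = Counter(user for user, _ in events)
    teams = {team for _, team in events}
    actions_per_user = sum(per_user.values()) / len(per_user) if per_user else 0.0
    return {
        "unique_users": len(per_user),         # breadth across users
        "unique_teams": len(teams),            # breadth across teams
        "actions_per_user": actions_per_user,  # a simple proxy for depth
    }


summary = breadth_and_depth([
    ("a", "team1"), ("a", "team1"), ("a", "team1"),
    ("b", "team2"),
])
```

An average hides skew, so a team that wants a fuller picture of depth might also look at the distribution of actions per user rather than a single number.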

4. Time to adopt or time to value

Time to adopt measures how quickly users reach meaningful value after first exposure. It reveals how intuitive and accessible a feature feels.

Teams look for:

- How long it takes users to complete the first value action

- Where users slow down or abandon the process

- Differences in time to value across segments

A short time-to-value often signals clear positioning and a smooth onboarding experience. Longer timelines suggest friction, setup complexity, or unclear expectations. Improving this metric often leads to higher feature adoption.
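One hedged way to compute time to value is the median gap between a user's first exposure and their first value action. The sketch assumes two hypothetical lookups keyed by user ID and reports hours. The median is used here because a few very slow users can distort an average.

```python
from datetime import datetime
from statistics import median


def median_time_to_value_hours(first_exposure, first_value):
    """Both arguments map user_id -> datetime. Returns hours, or None if no data."""
    hours = [
        (first_value[user] - first_exposure[user]).total_seconds() / 3600
        for user in first_value
        if user in first_exposure and first_value[user] >= first_exposure[user]
    ]
    return median(hours) if hours else None


exposure = {
    "a": datetime(2024, 1, 1, 0, 0),
    "b": datetime(2024, 1, 1, 0, 0),
    "c": datetime(2024, 1, 1, 0, 0),  # exposed but never reached value
}
value = {
    "a": datetime(2024, 1, 1, 2, 0),
    "b": datetime(2024, 1, 1, 6, 0),
}
ttv = median_time_to_value_hours(exposure, value)
```

Users who never reach value are left out of this number, so it is worth reading it alongside the activation rate.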

5. Duration of adoption

Adoption duration indicates whether users continue using a feature weeks or months after adoption. It separates initial interest from lasting impact.

Teams usually analyze:

- Whether users continue using the feature over longer periods

- How adoption holds across different cohorts

- Whether usage drops after initial success

A feature that sustains usage over time usually supports recurring work. Measuring duration helps teams understand long-term value beyond launch metrics.
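Duration is often viewed as a retention curve for adopters. The sketch below assumes weeks are numbered as plain integers and that two hypothetical lookups exist: the week each user adopted, and the set of weeks in which each user completed a value action.

```python
def weekly_retention(adoption_week, active_weeks, horizon=4):
    """Share of adopters still active k weeks after adoption, for k = 1..horizon.

    adoption_week: dict of user_id -> week index when the user adopted.
    active_weeks: dict of user_id -> set of week indices with a value action.
    """
    adopters = list(adoption_week)
    curve = []
    for k in range(1, horizon + 1):
        retained = sum(
            1 for user in adopters
            if adoption_week[user] + k in active_weeks.get(user, set())
        )
        curve.append(retained / len(adopters) if adopters else 0.0)
    return curve


curve = weekly_retention(
    adoption_week={"a": 0, "b": 0},
    active_weeks={"a": {0, 1, 2}, "b": {0, 1}},
    horizon=3,
)
```

Running the same function per cohort (for example, per launch month) shows whether adoption holds for later cohorts as well as early ones.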

6. Feature exposure

Feature exposure measures how many eligible users had a real chance to discover the feature. It adds critical context to adoption numbers.

Teams track:

- How many users encountered the feature naturally

- Where discovery happened within workflows

- Whether exposure depended on announcements or guidance

Low exposure paired with low adoption often points to discoverability gaps. Exposure helps teams avoid misreading feature adoption metrics.

7. Drop-off and abandonment rate

Drop-off and abandonment rates show where users disengage within a feature workflow. They reveal friction points that block adoption.

Product teams examine:

- Where users stop before reaching the first value

- Which steps cause confusion or effort

- Patterns of repeated abandonment

For example, if many users abandon a feature during setup, the issue is likely complexity rather than relevance. This metric guides focused improvements.
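Drop-off between steps can be sketched as the share of users lost from one step to the next. The step names and counts below are made up for illustration.

```python
def step_drop_off(step_counts):
    """step_counts: ordered list of (step_name, users_reaching_step).

    Returns (from_step, to_step, drop_off_rate) for each adjacent pair.
    """
    rates = []
    for (prev_name, prev_n), (name, n) in zip(step_counts, step_counts[1:]):
        rate = 1 - n / prev_n if prev_n else 0.0
        rates.append((prev_name, name, round(rate, 3)))
    return rates


drop_offs = step_drop_off([
    ("opened", 200),
    ("configured", 100),
    ("first_run", 80),
])
worst_step = max(drop_offs, key=lambda row: row[2])
```

In this made-up data, half of the users are lost between opening the feature and finishing configuration, which is where a team would look first.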

8. Retention and stickiness impact

Retention and stickiness connect feature adoption to long-term product usage. They show whether adopted features influence ongoing engagement.

Teams evaluate:

- Whether users who adopt the feature return more often

- How feature adoption affects overall product usage

- Whether the feature supports core workflows

Features that improve retention usually solve repeat problems. Measuring this impact helps teams understand which features strengthen the product over time.
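A simple starting point is to compare how often adopters and non-adopters return to the product. The sketch assumes each eligible user is reduced to a hypothetical `(adopted, returned)` pair for the period under review. Note that this comparison shows correlation only: users who adopt a feature may already be more engaged for other reasons.

```python
def return_rate_by_adoption(users):
    """users: iterable of (adopted, returned) boolean pairs, one per eligible user."""
    totals = {True: [0, 0], False: [0, 0]}  # adopted? -> [users, users who returned]
    for adopted, returned in users:
        totals[bool(adopted)][0] += 1
        totals[bool(adopted)][1] += int(bool(returned))

    def rate(group):
        count, came_back = totals[group]
        return came_back / count if count else None

    return {"adopters": rate(True), "non_adopters": rate(False)}


sample_users = (
    [(True, True)] * 3 + [(True, False)]
    + [(False, True)] + [(False, False)] * 3
)
rates = return_rate_by_adoption(sample_users)
```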

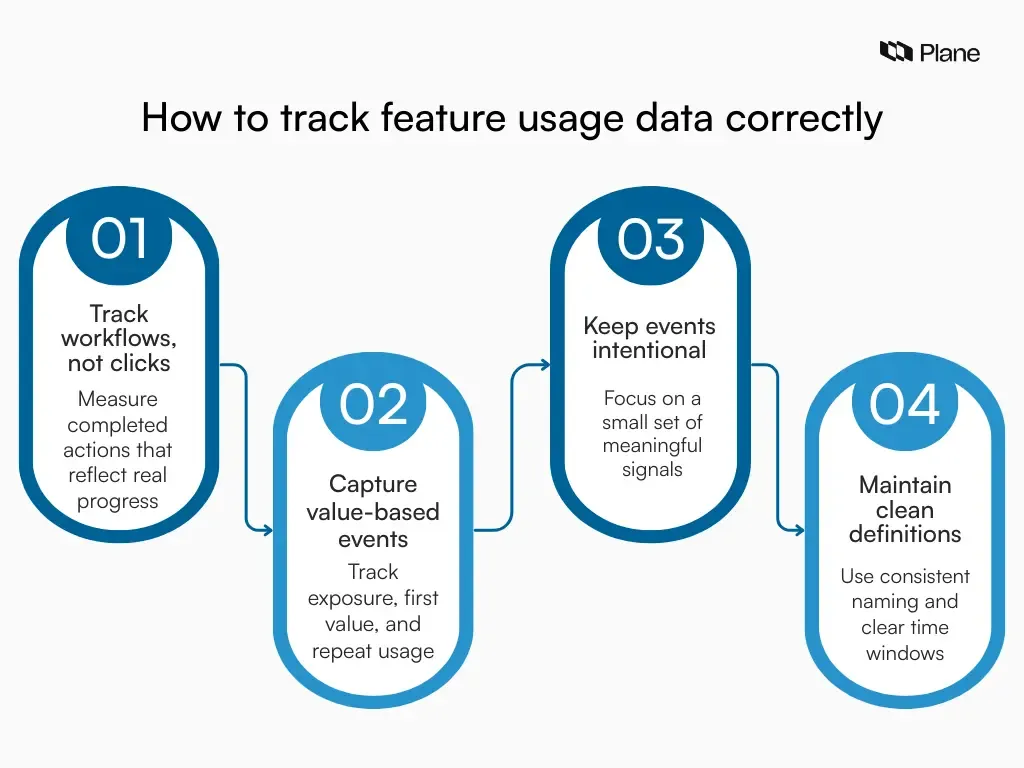

How to track feature usage data correctly

Good feature adoption metrics depend on how usage data is captured. When tracking focuses on isolated clicks or surface interactions, measuring feature adoption and usage produces noisy signals that fail to explain real behavior. Tracking should reflect how users move through workflows and reach value.

1. Track workflows, not isolated clicks

Clicks show interaction, but workflows show intent. A single click may reflect curiosity, while a completed workflow reflects progress. Feature usage tracking works best when events map to meaningful steps in how work gets done. For example, instead of tracking every interaction inside a planning feature, teams should track whether users completed the planning flow from start to finish. This approach keeps feature usage metrics aligned with real outcomes.

2. Capture events that signal adoption

Not all events matter equally. Effective feature adoption measurement focuses on a small set of signals that mark progress toward value.

Teams usually track:

- Exposure events that show users encountered the feature in context

- First value events that confirm users experienced a clear benefit

- Repeat usage events that indicate continued reliance

Together, these events form a clear picture of how users discover, try, and adopt a feature over time.
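The three signals can be modeled as a small, fixed vocabulary of event kinds. The sketch below is illustrative only: `EventKind`, `FeatureEvent`, and `value_event` are hypothetical names, and the in-memory set stands in for whatever store a real pipeline would use to remember who has already reached first value.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from enum import Enum


class EventKind(Enum):
    EXPOSURE = "feature_exposed"
    FIRST_VALUE = "first_value_reached"
    REPEAT_USE = "repeat_use"


@dataclass(frozen=True)
class FeatureEvent:
    user_id: str
    feature: str
    kind: EventKind
    occurred_at: datetime


def value_event(user_id, feature, users_with_value, now=None):
    """Build a first-value or repeat-use event for a completed value action.

    users_with_value: mutable set of user ids that already reached first value.
    """
    now = now or datetime.now(timezone.utc)
    if user_id in users_with_value:
        kind = EventKind.REPEAT_USE
    else:
        kind = EventKind.FIRST_VALUE
        users_with_value.add(user_id)  # remember for the next call
    return FeatureEvent(user_id, feature, kind, now)


seen = set()
first = value_event("u1", "planning", seen)
second = value_event("u1", "planning", seen)
```

Fixing the vocabulary in one enum is also a modest guard against event names drifting between releases.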

3. Avoid common tracking mistakes

Tracking breaks down when definitions and context are ignored. Teams often track too many events, mix eligible and ineligible users, or rely on raw counts without timeframes. These mistakes inflate feature usage metrics and hide adoption patterns.

Another common issue is inconsistent event naming across releases, which makes trends difficult to interpret. Clean instrumentation, consistent definitions, and clear time windows ensure that feature adoption and usage data remain trustworthy and actionable.

Segmenting adoption data to get meaningful insights

Feature adoption metrics become actionable only when they reflect who is adopting a feature and under what conditions. Aggregated numbers hide important patterns, while segmentation reveals why adoption succeeds in some contexts and stalls in others.

1. Segment by user roles or personas

Different roles use the same feature for different reasons. A project manager may rely on a feature daily, while an engineer may use it occasionally or in a limited context. Segmenting feature adoption and usage by role helps teams understand whether a feature supports the needs of its intended audience. It also prevents teams from misjudging adoption when a feature performs well for one persona but poorly for another.

2. Segment by account or lifecycle stage

User behavior changes as teams grow and mature. New accounts often explore features differently than long-standing ones. Segmenting by lifecycle stage helps product teams see whether adoption occurs during onboarding or only after users become familiar. This view highlights whether a feature delivers immediate value or requires deeper product understanding to adopt.

3. Segment by permissions or access levels

Access shapes adoption more than design alone. Users cannot adopt features they cannot see or use. Segmenting by permissions or plan level ensures that feature adoption metrics reflect realistic opportunities. This segmentation helps teams distinguish between adoption challenges and access constraints and avoid drawing incorrect conclusions.

4. Segment by team size or maturity

Team size and maturity influence how features are used. Smaller teams may adopt features quickly, while larger teams require coordination and process alignment. Segmenting adoption data by team size reveals whether a feature scales with complexity. This insight helps teams tailor improvements that support both early-stage and mature organizations.
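Any of the four segmentations above reduces to the same calculation: an adoption rate per group, computed over eligible users only. A minimal sketch, assuming each user is a dictionary with hypothetical `segment`, `eligible`, and `adopted` keys:

```python
from collections import defaultdict


def adoption_rate_by_segment(users):
    """users: iterable of dicts with 'segment', 'eligible', and 'adopted' keys."""
    counts = defaultdict(lambda: [0, 0])  # segment -> [eligible, adopted]
    for user in users:
        if not user["eligible"]:
            continue  # access constraints should not look like low adoption
        counts[user["segment"]][0] += 1
        counts[user["segment"]][1] += int(bool(user["adopted"]))
    return {
        segment: 100 * adopted / eligible
        for segment, (eligible, adopted) in counts.items()
    }


by_role = adoption_rate_by_segment([
    {"segment": "project_manager", "eligible": True, "adopted": True},
    {"segment": "project_manager", "eligible": True, "adopted": False},
    {"segment": "engineer", "eligible": True, "adopted": False},
    {"segment": "viewer", "eligible": False, "adopted": False},
])
```

The `segment` value can be a role, a lifecycle stage, a plan level, or a team-size bucket. Segments with no eligible users are left out of the result rather than reported as 0%.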

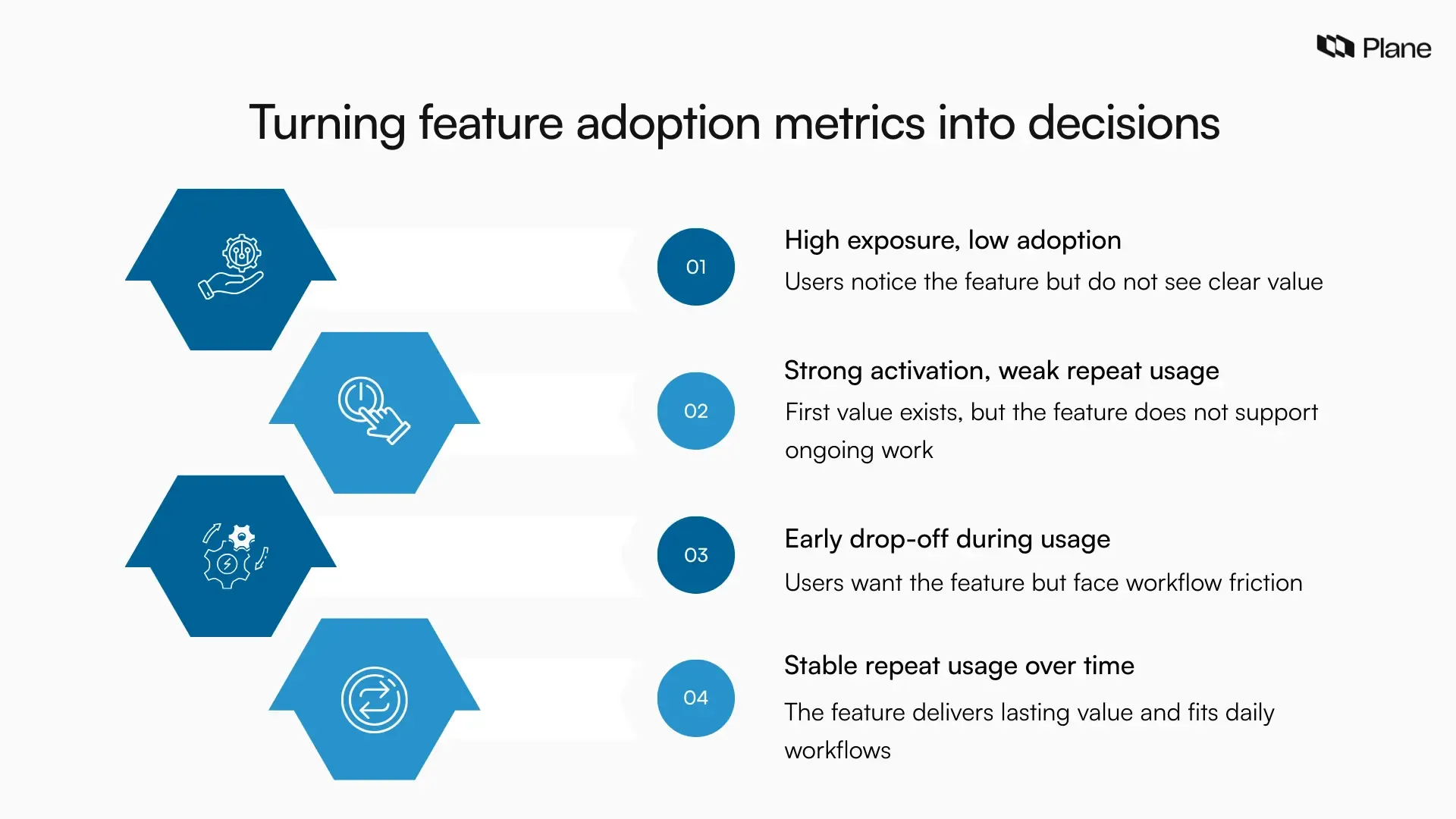

How to interpret feature adoption metrics and decide next steps

Feature adoption metrics start to matter only when teams pause and ask a simple question: what should we do differently because of what we are seeing? Interpretation bridges the gap between numbers and product decisions, helping teams act with confidence rather than instinct.

1. When exposure is high, but adoption stays low

This pattern shows up often. Users see the feature, but very few adopt it. In most cases, the issue is not a lack of awareness. It is clarity.

Teams should ask whether users understand what the feature is for when they encounter it. If discovery happens without context, users may notice the feature and move on. Sometimes the value appears too late, or the use case feels disconnected from daily work. Improving this scenario usually means tightening messaging, adjusting placement, or aligning the feature more closely with an existing workflow.

2. When activation looks strong, but repeat usage drops off

Strong activation feels encouraging at first. Users try the feature, reach the first value, and then disappear. This pattern suggests the feature worked once but did not earn a return visit.

Teams should look at whether the feature supports a recurring need or only a one-time task. If users rely on other tools after the initial use, repeat usage naturally fades. In many cases, improving repeat usage means reducing extra steps, smoothing handoffs, or making the feature easier to return to during everyday work.

3. Telling the difference between friction and lack of value

Adoption problems usually fall into one of two categories. Either users want to use the feature but struggle to complete it, or they complete it and decide it is not worth coming back to.

Friction shows up when users drop off early or abandon workflows midway. A lack of value occurs when users finish the workflow but still do not return. Looking at drop-off points and usage over time helps teams tell these two stories apart. Once the difference is clear, teams can decide whether to simplify the experience or rethink the feature’s role entirely.
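The distinction can be expressed as a rough rule of thumb over three counts: users who started the workflow, users who completed it, and users who came back afterward. The thresholds in this sketch (50% completion, 30% return) are arbitrary assumptions for illustration, not benchmarks, and each team would need to choose its own.

```python
def diagnose(started, completed, returned, completion_floor=0.5, return_floor=0.3):
    """Label an adoption problem as friction or lack of value.

    The two floors are illustrative assumptions, not industry benchmarks.
    """
    if started == 0:
        return "no data"
    if completed == 0 or completed / started < completion_floor:
        return "friction"       # users drop off before finishing
    if returned / completed < return_floor:
        return "lack of value"  # users finish but do not come back
    return "healthy"


early_drop = diagnose(started=100, completed=30, returned=10)
no_return = diagnose(started=100, completed=80, returned=10)
holding = diagnose(started=100, completed=80, returned=40)
```

A label like this is only a prompt for investigation. Drop-off points and session-level evidence are still needed to confirm what is actually happening.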

How to improve feature adoption based on what you measure

Improving feature adoption starts with listening closely to what the data already tells you. Feature adoption metrics highlight where users hesitate, where they struggle, and where value fails to carry forward. The goal is not to add more features, but to remove barriers between users and meaningful outcomes.

1. Improving discoverability

When feature exposure remains low, users often miss the feature altogether or encounter it too late. Discoverability improves when features appear naturally within existing workflows rather than in isolated menus. Teams should look at where users already spend time and introduce the feature at moments when it makes sense. Clear labels, contextual entry points, and timely prompts help users recognize relevance without extra effort.

2. Reducing workflow friction

Friction surfaces when users start a workflow but fail to complete it. This usually points to unnecessary steps, unclear actions, or missing prerequisites. Reviewing drop-off and abandonment data helps teams pinpoint where effort outweighs value. Simplifying flows, removing optional steps, and clarifying next actions often lead to immediate improvements in feature adoption and usage.

3. Guiding users to the first value

First-value moments determine whether users return. When users reach value quickly, confidence builds, and adoption follows. Teams should focus on shortening the path to that moment by highlighting the most important action and minimizing setup. Clear guidance, sensible defaults, and focused onboarding experiences help users understand why the feature matters and how it fits into their work.

4. Reinforcing repeated usage through better workflows

Repeated use grows when features support ongoing work rather than one-time tasks. Reinforcement comes from making the feature easy to return to and useful across multiple scenarios. Teams should observe how users transition between tasks and ensure the feature integrates smoothly into those flows. When a feature consistently saves time or reduces effort, adoption strengthens naturally.

Final thoughts

Measuring feature adoption and usage gives product teams clarity once the release noise has faded. It shows which features make it into real workflows and which struggle to deliver lasting value. When teams move beyond surface-level feature usage metrics and focus on adoption, decisions are grounded in how people actually work.

The real advantage comes from treating adoption measurement as an ongoing practice. Clear definitions, thoughtful tracking, and regular interpretation help teams learn faster and improve with intent. Over time, measuring feature adoption turns from a reporting task into a reliable way to build features that matter.

Frequently asked questions

Q1. What is feature adoption?

Feature adoption is the repeated use of a product feature because it delivers ongoing value to users. A feature is considered adopted when users rely on it regularly as part of their normal workflow, not just during initial exploration.

Q2. What is the difference between usage and adoption?

Feature usage measures whether users interacted with a feature at least once. Feature adoption measures whether users continue using the feature over time because it helps them complete meaningful work. Usage shows activity, while adoption shows sustained value.

Q3. What does feature usage mean?

Feature usage refers to any interaction a user has with a feature within a specific time period, such as opening it, clicking an action, or completing a step. Feature usage metrics indicate exposure and interaction, but do not confirm long-term usefulness.

Q4. What is an example of feature adoption rate?

The feature adoption rate is calculated by dividing the number of eligible users who adopt the feature by the total number of eligible users. For example, if 40 of 100 eligible users regularly use a feature, the feature adoption rate is 40%.

Q5. Why does measuring feature adoption matter?

Measuring feature adoption helps product teams understand which features deliver real value, guide product improvements, and make better roadmap decisions. It ensures success is judged on sustained user behavior rather than short-term activity.
